Today, ensuring good performance and responsiveness within our analytics solutions is an indispensable requirement that every system must have. To this end, within the world of analytics and data ingestion with Spark and Delta Lake, we have several strategies when configuring our tables and processes, such as, traditionally, incorporating the use of partitions and applying the Z-ORDER algorithm (not to be confused with Fabric’s own V-ORDER) that either (together or separately), allow us to optimize the performance of our queries.

However, these mechanisms, although very useful (both together and separately), are not without any disadvantages and may not be feasible in all cases. On the one hand, partitioning on sorted tables using Z-ORDER gives us more control over data organization, has better support for parallel writing scenarios as well as allows us to optimize specific partitions in a more granular way (sorting by different criteria each one for example).

However, we must keep in mind that:

- To partition our data, we should choose a column with low cardinality(a date type column usually) , which ensures a data volume of at least 1 GB per partition and avoids creating a very high number of partitions (advisable to keep it below 10,000). Otherwise, you may encounter possible performance problems with partitions that are too small and unevenly distributed

- Regarding Z-ORDER, its use is more oriented to columns with high cardinality, being able to make use of more than one column although each added column detracts power to its performance. In addition, it cannot reorder already sorted files, so if it is necessary to change the chosen keys, it will be necessary to rewrite the table/partition in question. This operation will be even more expensive if what we want to change is the fields by which we partition

- Both require prior knowledge of the query patterns used by users to take full advantage of their functionalities, requiring the columns to be present in the queries made to be able to perform file skipping

- Partitioning is not usually recommended for tables smaller than 1 TB

Given the requirements for each one of them, either together or separately, it is difficult to be able to provide them as an integral part of our solution. However, they are no longer the only option.

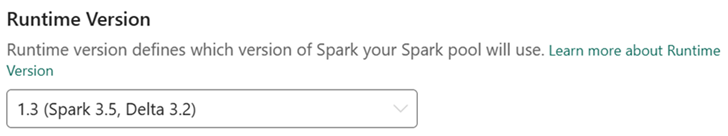

With the arrival of the new Spark runtime to Fabric, 1.3, the version of Delta Lake included in it allows us to apply a new feature that simplifies many of these requirements and enables its application on a wider range of cases, Liquid Clustering.

Liquid Clustering is a new distribution algorithm for delta tables that has greater flexibility and coverage, not being necessary to partition the data and being able to provide a solution to scenarios where the query patterns are not previously known and/or may change over time. With it, it is possible to redefine the columns used without the need to rewrite the data and simplify the implementation of our solutions replacing partitioning and Z-ORDER (which it is not compatible).

It allows us to use up to four columns as keys to cluster by, which can be defined in any order. To get the most out of it, it is recommended to use columns commonly used as query and/or operation filters (such as a MERGE to update data) as well as in scenarios where our tables:

- Have filters on columns with high cardinality

- Have an asymmetric/unbalanced data distribution or where a partitioning key results in too many or too few partitions

- Grow rapidly, requiring maintenance and adjustments

- Have query patterns that change over time

Let’s put it into practice

To use Liquid Clustering, as mentioned above, it is essential to have the Spark 1.3 runtime configured in our workspace:

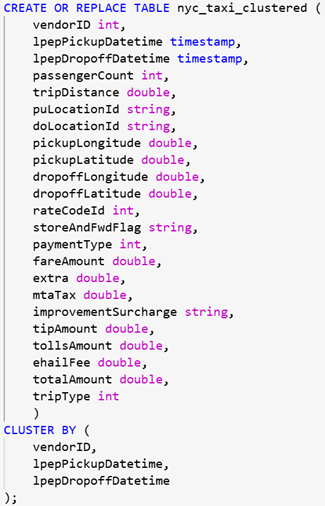

Subsequently, from a notebook and using Spark SQL, we can create the clustered table thanks to the CLUSTER BY option, where we specify the columns by which we want to cluster the table:

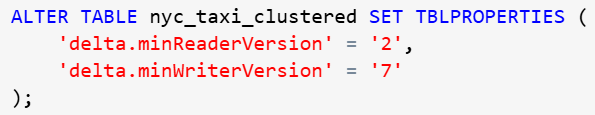

Once the table has been created, it is important to set the delta.minReaderVersion and delta.maxReaderVersion properties to the appropriate version, respectively, so that the table can be correctly recognized by the lakehouse once it has been implemented. Otherwise, the table will present errors and will not be displayed correctly by the explorer.

To configure these properties, we will do it again through Spark SQL:

IMPORTANT: This operation is irreversible

We can check the result thanks to the DESCRIBE DETAIL command:

Conclusions

With Liquid Clustering, we obtain a form of optimization that is easier and more flexible to apply and that can contribute to improving the performance of our solutions, either individually or in conjunction with the other strategies mentioned above, so that we can obtain the best possible result.

It is worth remembering that although Liquid Clustering is not compatible with partitioning and/or the application of Z-ORDER, this does not imply that it is completely exclusive, being able to opt for a specific optimization strategy for each of the tables of our solution according to its characteristics.

As a rule, we can keep in mind the following recommendations for its application:

- For tables with a size of less than 10 GB, it will be more advisable to use Liquid Clustering

- For tables with a size between 10 GB and 10 TB, both approaches can be correct, the deciding factor being the needs and characteristics of each table

- For tables larger than 10 TB, it is more advisable to use partitions in conjunction with Z-ORDER

In any case, it is more than advisable, if feasible, to test all approaches to decide the best option in each scenario, trying to find the best fit for our data and its use, thus contributing to improved query performance and greater efficiency in our operations.