This post will guide you through the essentials of getting started with Microsoft Real-Time Analytics. You’ll discover the foundational components, such as Real-Time Hub, Eventstream, Eventhouse, and KQL databases, that work together to deliver real-time insights.

By understanding key use cases and practical steps, you’ll see how real-time analytics can quickly enhance customer experiences, optimize operations, and provide a robust platform for data-driven decisions. Whether you’re new to data analytics or looking to expand your capabilities, this guide will show you how to harness the full potential of real-time data to benefit your business immediately.

In today’s fast-paced digital landscape, businesses are constantly generating data from a variety of sources, from customer interactions to internal processes. Leveraging this data in real-time is crucial for staying competitive, improving decision-making, and responding promptly to market changes. Microsoft Real-Time Analytics provides a powerful suite of tools that enables businesses to capture, process, and analyze data as it happens. This immediate insight can drive meaningful actions that add value to your business from day one.

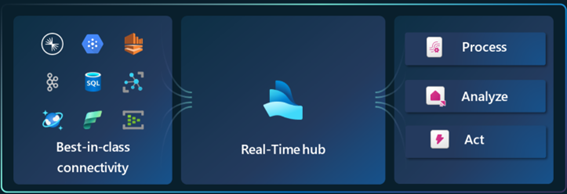

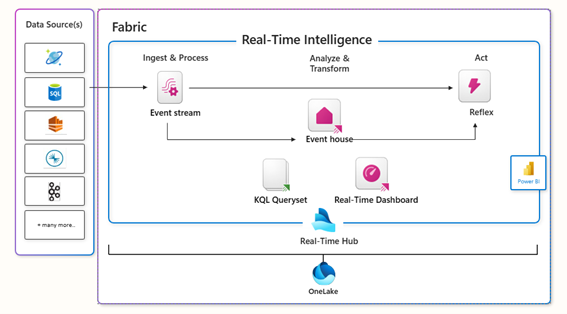

The workflow in a real-time scenario consists of these steps:

- Data Ingestion: Real-time data from various sources (Cosmos DB, SQL, Event Hubs, etc.) is ingested.

- Processing: The data flows through the Ingest & Process stage, where initial transformations and analysis take place. The component used for both data ingestion and processing is the Eventstream.

- Storage and Querying: The processed data is stored in the Eventhouse for intermediate analysis. This component stores the processed events temporarily, acting as an intermediate store or buffer where data can be queried or further processed. KQL queries can be executed here to analyze and transform the data.

- Hub for Real-Time Management: The Real-Time Hub is a central part of the architecture that enables routing and managing real-time data between components. It connects storage and processing components, facilitating the continuous flow of data.

- Centralized Storage: OneLake stores all processed and historical data, making it accessible for analytics.

- Visualization: Data is visualized in the Real-Time Dashboard or Power BI.

- Action: Reflex is an action component that allows the system to respond automatically to specific events or conditions in real-time. It could trigger alerts, notifications, or automated workflows based on defined rules. For instance, if an anomaly is detected, Reflex could trigger an alert or initiate a corrective action.

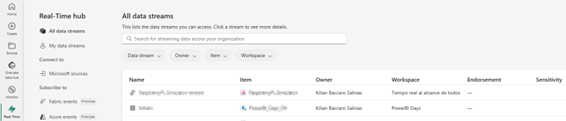

2. Real-Time Hub

The Real-Time Hub is the single place for everything related to real-time data in Microsoft Fabric. It helps you connect to the supported data sources, monitor and manage your organization’s artifacts, and act on the data in real time.

The Real-Time Hub consists of three elements: Data streams, Microsoft sources and Fabric events:

- Data streams: This is where you manage all ingestions, transformations, and data destinations in Microsoft Fabric. You can manage everything through eventstreams, fed either by sources external to Microsoft Fabric or by a custom endpoint that uses Kafka, AMQP, or Event Hubs.

- Microsoft sources: All the sources you have access to can be ingested, managed, or maintained directly from the Real-Time Hub. These sources include Cosmos DB, SQL, IoT Hubs, Event Hubs, and others.

- Fabric events: Two types of events are available: events from a Fabric workspace and events from an Azure storage account.

The Microsoft Fabric Real-Time Intelligence team continues to develop and add new data sources, so this will expand and change over time.

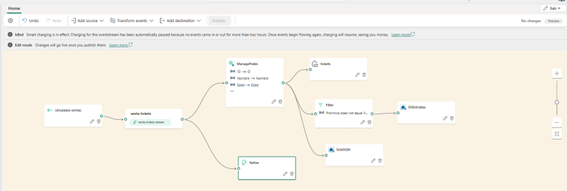

3. Eventstream

In Microsoft Fabric, the Eventstream component is central to enabling real-time analytics by acting as the continuous pipeline that captures, processes, and routes data events as they happen. It’s designed to provide low-latency data processing, allowing organizations to generate actionable insights and trigger immediate responses.

It offers a low-code environment in which you can drag and drop elements such as sources, transformations, and destinations:

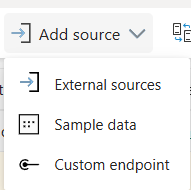

SOURCES

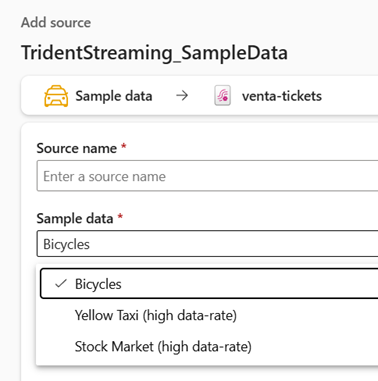

The sources that you have available in the Eventstream are external sources, sample data or a custom endpoint:

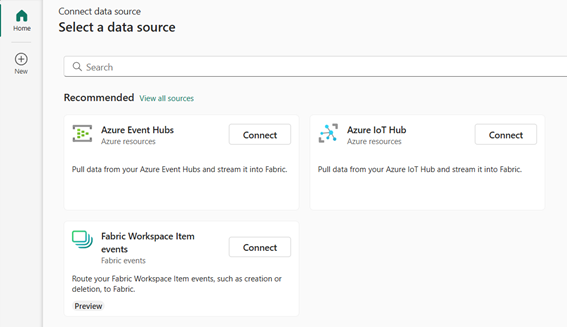

1. EXTERNAL SOURCES

External sources let you connect and stream data from various external systems and services directly into Microsoft Fabric. They include connectors to Azure Event Hubs, Azure IoT Hub, and Kafka, which are commonly used for handling large-scale data streams in real time. External sources are ideal for organizations with existing data pipelines or IoT solutions that produce high-frequency data. For example, an organization tracking IoT sensor data for manufacturing would connect its IoT Hub directly to Fabric’s Eventstream.

2. SAMPLE DATA

Sample data provides ready-made datasets for testing and experimentation. Each one is pre-configured to simulate a specific type of real-time data flow, such as transactional data or clickstream events.

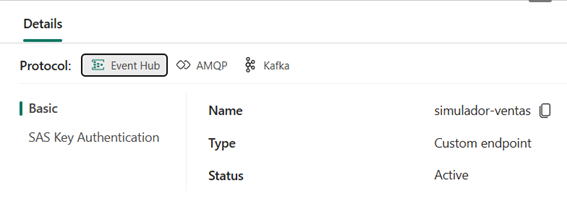

3. CUSTOM ENDPOINT

A custom endpoint lets you create a custom REST endpoint that accepts HTTP requests and pushes data to the Eventstream. Because you can ingest data from almost any system that supports HTTP requests, it is a versatile option. It is suitable for custom applications or scenarios where you need to send specific events or data to Fabric in real time. For instance, a web application could send user activity data (like button clicks or page views) directly to Fabric’s Eventstream.

These options provide flexibility to ingest data from established streaming services, use pre-configured sample datasets for quick testing, or set up custom sources for unique or one-off data needs.

TRANSFORMATIONS

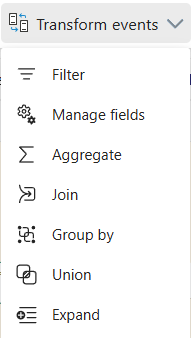

You can apply transformations to the data within the Eventstream before you send it to its destination. Here’s an overview of each transformation option shown in the previous image:

- Filter: This transformation lets you filter out events based on specific conditions, so only events meeting those conditions proceed in the stream. For example, you could filter to only process events where a temperature reading exceeds a certain threshold.

- Manage Fields: This option allows you to select, rename, or remove specific fields in the incoming data. For example, if your data includes extra fields you don’t need, you can remove them to optimize processing.

- Aggregate: With this transformation, you can perform aggregate calculations on your data, such as summing values, calculating averages, counts, or other statistical measures. It’s useful for summarizing data in time intervals, like calculating the average sales every minute.

- Join: The Join operation enables you to combine two different streams based on a common key. For instance, if you have separate event streams for sales transactions and customer details, you can join them based on a customer ID to enrich transaction data with customer information.

- Group By: This transformation allows you to group events based on one or more fields and then apply aggregations on each group. For example, you could group by region and calculate total sales for each region in real time.

- Union: Union allows you to merge multiple streams into one by combining events from different sources or tables that have the same schema. It’s helpful when you want to process events from multiple streams as a single unified stream.

- Expand: This option is used to “flatten” nested data structures. If a field contains an array or nested structure (such as a JSON array), Expand will break it out into individual records, making it easier to work with complex data.

Each of these transformations helps you control and shape the data flow to meet specific analysis requirements, allowing for more flexible and targeted real-time analytics within Microsoft Fabric’s Eventstream.
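To make the logic of these operators more concrete, here is a minimal sketch of what Filter, Manage Fields, Group By, and Aggregate do, written in KQL against a small inline table. This is only an illustration: Eventstream transformations are configured in the drag-and-drop editor rather than written in KQL, and the `SensorReadings` table, its columns, and the threshold of 75 are all hypothetical.

```kusto
// Hypothetical sample data standing in for a stream of sensor events.
let SensorReadings = datatable(DeviceId: string, Timestamp: datetime, Temperature: real, Firmware: string)
[
    "dev-01", datetime(2024-01-01 10:00:05), 71.2, "1.0",
    "dev-01", datetime(2024-01-01 10:00:35), 78.9, "1.0",
    "dev-02", datetime(2024-01-01 10:00:40), 80.1, "1.1",
    "dev-02", datetime(2024-01-01 10:01:10), 82.4, "1.1"
];
SensorReadings
| where Temperature > 75.0                      // Filter: keep only readings above the threshold
| project DeviceId, Timestamp, Temperature      // Manage Fields: drop the column we don't need
| summarize AvgTemperature = avg(Temperature)   // Aggregate: compute an average...
    by DeviceId, bin(Timestamp, 1m)             // Group By: ...per device and one-minute window
```

Running the same shape of query in a KQL Queryset against data that has already landed in an Eventhouse can be a quick way to check what a transformation should produce before you configure it in the Eventstream.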

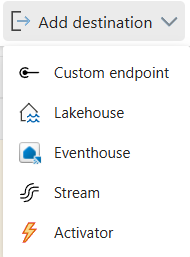

DESTINATIONS

Eventstream allows you to send processed data to various destinations where it can be stored, analyzed, or visualized. Here are the main destination options for Eventstream:

- Custom Endpoint: This option enables you to send data to a custom HTTP endpoint via a webhook. It provides the flexibility to integrate with third-party applications, external APIs, or any system that accepts HTTP requests. This destination is ideal for custom integrations where you want to route events to another application or service not directly supported by Microsoft Fabric. For example, you could use a custom endpoint to send data to an external CRM system.

- Lakehouse: Allows you to store data in a Fabric Lakehouse, which is designed to handle structured and unstructured data. Lakehouse provides scalable storage and is optimized for large-scale analytics, supporting long-term data retention and analysis. This destination is suitable for scenarios where you need to store real-time data for batch processing, data science, or machine learning tasks. For instance, a retail company could store transaction logs in a Lakehouse to later analyze purchasing trends.

- Eventhouse: An Eventhouse is specifically designed for real-time event ingestion and is optimized for query performance on streaming data. This destination allows you to store real-time data directly in a Kusto Query Language (KQL) database within Microsoft Fabric.

KQL databases are optimized for fast querying and are particularly suited for real-time analytics workloads, making it easy to work with large volumes of streaming data. You can use KQL for in-depth analysis, anomaly detection, and pattern recognition on the stored data.

This kind of database is ideal for scenarios where you need to run complex queries on the incoming data or retain data over time for historical analysis. For example, a manufacturing company can store sensor data in a KQL database to detect trends and prevent equipment failure.

- Stream: This option allows you to send data from one Eventstream to another, effectively chaining streams together. It enables advanced stream processing workflows in which data needs to be processed in multiple stages. For instance, one stream could filter and aggregate data, while another applies additional transformations or enrichments.

- Activator: The Activator destination is used to trigger actions based on real-time data conditions or thresholds, enabling automated responses or alerts when specific events occur. For example, a security company might use the Activator to detect suspicious activities and automatically trigger alerts or actions when certain thresholds are met.

These destinations offer a range of storage, integration, and processing options, allowing you to choose the right destination based on your real-time data analytics needs.

4. Eventhouse

The Eventhouse is a fundamental component for managing and processing data in real time. It supports unstructured, semi-structured and structured data. Essentially, it provides an optimized structure for handling highly continuous event streams, which is crucial in applications that rely on real-time data, such as fraud detection or IoT monitoring.

MAIN FEATURES

OPTIMIZED FOR DATA FLOW WITHOUT USER INTERVENTION

Eventhouse is designed to ingest real-time data in a highly efficient manner, with storage optimized to maintain minimal latency. No additional configuration is required to optimize or improve performance; simply create it and it is immediately available and ready for use.

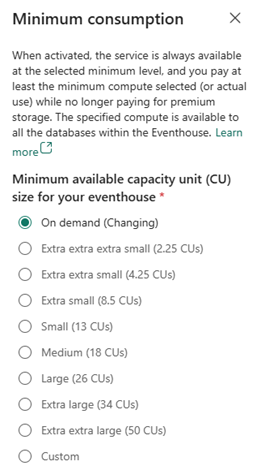

SERVERLESS

Eventhouse is designed to optimize costs by automatically suspending the service when not in use. This means that, in the absence of incoming data flow, the computation of KQL queries is temporarily stopped. When reactivated, a latency of a few seconds may be experienced. For environments where this latency is critical, it is recommended to enable the minimum consumption setting, which ensures that the service is always active at a predefined minimum level until data flow resumes and standard consumption is restored. The following image shows the different minimum consumption levels available:

HOT AND COLD CACHE

Eventhouse offers two modes of data storage: hot tier and cold tier. To ensure optimal query performance, the cache, or hot tier, stores data on SSDs or even RAM. However, this high performance comes at an additional cost, comparable to the premium tier of Azure Data Lake Storage, due to the higher price of caching.

Data located on standard or cold tier storage is cheaper, but slower to access. It is essential to define an appropriate cache policy to move data that does not require frequent analysis or is not critical to the standard storage. On the other hand, keeping highly relevant data in cache ensures its availability for immediate and near real-time analysis.

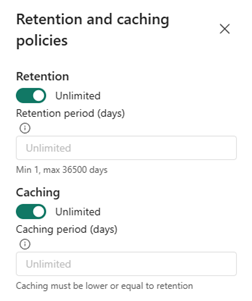

DATA RETENTION AND CACHING POLICIES

The retention policy manages the automatic deletion of data in tables or materialized views, which is especially useful for removing continuous data whose relevance decreases over time.

The implementation of a retention policy is essential when ingesting data on an ongoing basis, as it allows you to manage costs efficiently. Data that exceeds the period defined by the retention policy is considered eligible for deletion. However, there is no guarantee as to exactly when the deletion will take place; data may remain for a while even after it becomes eligible.

In general, the retention policy is set to limit the age of the data from the time of its ingestion.
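As a sketch of what this looks like in practice, the following management commands set and then inspect a retention policy on a table. The table name `Tickets` and the 30-day period are assumptions chosen for illustration; pick a period that matches your own requirements.

```kusto
// Make data in the (hypothetical) Tickets table eligible for deletion 30 days after ingestion.
.alter-merge table Tickets policy retention softdelete = 30d

// Check which retention policy is currently set on the table.
.show table Tickets policy retention
```

Each management command is run on its own, not as part of a query.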

To optimize query performance, a multi-level data caching system is implemented. Although data is stored in secure and reliable storage, a part of it is cached on processing nodes, SSDs or even RAM to ensure faster access.

The cache policy allows you to specify which data should be kept in cache, differentiating between hot and cold data. By setting a cache policy for hot data, hot data is kept on local SSD storage, which speeds up queries, while cold data remains on reliable storage, which is cheaper, but slower to access.

Optimal query performance is achieved when all ingested data is cached. However, not all data justifies the cost of being kept in cache in the hot data layer.
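A cache policy can be expressed in a similar way. The sketch below keeps the most recent seven days of a table in the hot cache; the table name `Tickets` and the seven-day window are assumptions for illustration, and the right window depends on how far back your frequent queries usually look.

```kusto
// Keep the most recent 7 days of the (hypothetical) Tickets table in the hot cache.
.alter table Tickets policy caching hot = 7d

// Check which caching policy is currently set on the table.
.show table Tickets policy caching
```

Data older than the hot window stays queryable; it is simply served from the cheaper, slower storage described above.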

KQL DATABASE

Eventhouse works with KQL (Kusto Query Language) databases. A KQL database is a type of database optimized for real-time analysis of large volumes of data, using KQL, a query language developed by Microsoft to perform data search and analysis in services such as Azure Data Explorer and Microsoft Fabric.

An Eventhouse can contain several KQL databases.

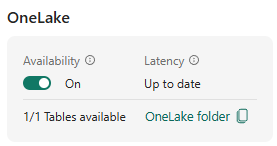

ONELAKE AVAILABILITY

One of the most important features of a KQL database is OneLake availability. When you activate this feature, the KQL data becomes available in OneLake, and you can query it in Delta Lake format through other Fabric engines, such as Direct Lake in Power BI, Warehouse, Lakehouse, and Notebooks.

You also have the possibility to create a OneLake shortcut from a Lakehouse or Warehouse.

Note that, to avoid writing many small Parquet files, this feature batches incoming data, which can delay write operations by hours when there is not enough data to create optimally sized Parquet files. The trade-off ensures that the Parquet files have a suitable size and that there are no performance problems when querying them.

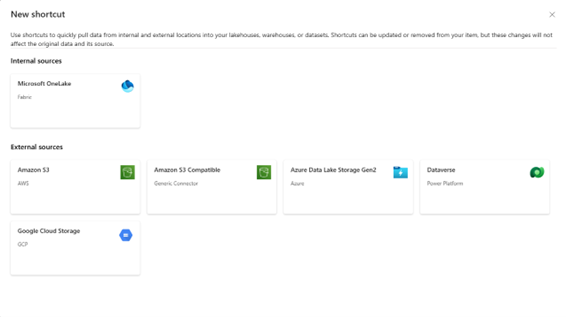

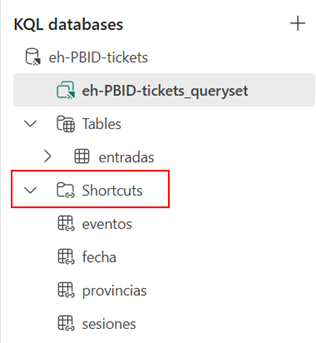

SHORTCUT

Shortcuts are references within OneLake that point to other storage locations without moving the original data. In a KQL database, you can also create shortcuts that point to sources inside or outside Fabric.

Once the shortcut has been created, you reference it in a query with the external_table() function:

```kusto
// Count the rows in the customer shortcut.
external_table('customer')
| count

// Enrich the ventas fact table with the date and store shortcuts.
ventas
| join kind=inner external_table('date') on $left.DateKey == $right.DateKey
| join kind=inner external_table('store') on $left.StoreKey == $right.StoreKey
| project OrderKey, OrderDate, MonthShort, StoreCode, CountryName
```

Currently, the allowed shortcuts are:

- OneLake

- Azure Data Lake Storage Gen2

- Amazon S3

- Dataverse

- Google Cloud Storage

KQL QUERYSET

KQL Querysets are used to run Kusto Query Language queries against the data in a KQL database.

Kusto Query Language (KQL) is a query language developed by Microsoft to work with large volumes of data, especially in real-time analytics contexts. It was created to interact with databases such as Azure Data Explorer and now Microsoft Fabric, which are designed to store and analyze massive time series data, telemetry events, application logs and other data generated in real time.

```kusto
ventas
| where OrderDate >= ago(7d)
```
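Building on the query above, a typical next step is to summarize the filtered rows over time. The sketch below reuses the `ventas` table and `OrderDate` column from the example; the `Orders` column name is made up for illustration.

```kusto
ventas
| where OrderDate >= ago(7d)                        // same filter as above: the last seven days
| summarize Orders = count() by bin(OrderDate, 1d)  // one row per day with the number of orders
| order by OrderDate asc                            // oldest day first
```

The `bin()` function rounds each timestamp down to the start of its day, which is what turns individual events into a daily series.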

5. Real-Time Dashboard

The collection and processing of real-time data is as crucial as the tools to visualize that information. Real-time dashboards give users the ability to interact with their data instantly.

Each tile in these dashboards is linked to KQL queries, which can be edited and configured to optimize data visualization. In addition, they include parameters, filters and navigation functions to explore data in greater detail.
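As an illustration, a tile query that reacts to the dashboard’s time range picker might look like the sketch below. It assumes the dashboard uses the default time range parameter, with variables named `_startTime` and `_endTime`, and a hypothetical `Tickets` table with a `Timestamp` column. The two `let` lines are only there so the query can be tried in a KQL Queryset; inside a dashboard tile the parameter supplies these values, so you would leave them out.

```kusto
// Stand-ins for the dashboard time range parameter (remove these two lines inside a tile).
let _startTime = ago(1h);
let _endTime = now();
Tickets                                                   // hypothetical fact table
| where Timestamp between (_startTime .. _endTime)        // honor the selected time range
| summarize TicketsSold = count() by bin(Timestamp, 1m)   // tickets per minute
| render timechart
```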

As the visual charts mirror the data stored in Eventhouse and take advantage of low-latency data availability, the dashboards are ideal for scenarios where data exploration must be immediate and KQL expertise predominates.

6. Real-Time Architectures

In Microsoft Fabric, there are several real-time data architecture options for Power BI and other services, each designed to handle different types of real-time analytics requirements.

For these scenarios we are going to assume that our dimension tables are in a Lakehouse and that the fact table arrives in real time and is processed by our Eventstream.

Here’s an overview of some common architectures.

DIRECT LAKE MODE IN POWER BI

Direct Lake mode in Power BI is a feature that allows Power BI to connect directly to a data lake (like Fabric’s Lakehouse) without needing to import or pre-aggregate the data.

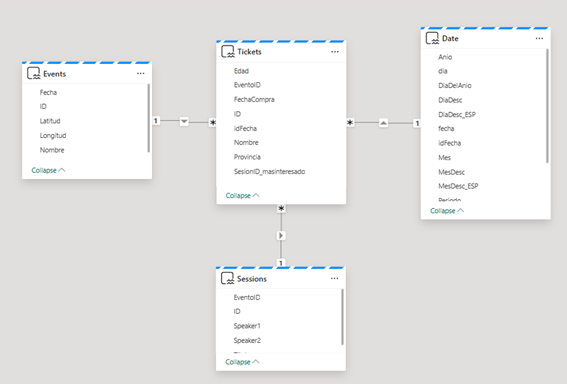

For this example, the destination of our Eventstream is going to be a Lakehouse, and we are going to connect in Direct Lake mode in Power BI to query our dimension tables and our fact table. This is the semantic model:

Our fact table, Tickets, is loaded by our Eventstream, which stores the data in our Lakehouse.

This architecture enables near-instant access to large volumes of data with low latency because it bypasses the data import process. This mode also provides the flexibility of directly querying real-time data stored in Delta Lake format in a Lakehouse. However, a potential drawback of this approach is that loading the fact table in the Lakehouse via Eventstream will generate numerous small files. To maintain optimal performance, you’ll need to implement a maintenance process to consolidate these small files into larger ones, as excessive small files can degrade performance.

MIXED MODE WITH IMPORT AND DIRECT QUERY TO A KQL DATABASE

Mixed mode combines both Import and DirectQuery in Power BI, allowing some data to be imported (cached for fast performance) while other data is queried in real-time directly from a KQL database (Kusto).

For this example, the destination of our Eventstream is going to be a KQL database. We are going to connect in DirectQuery mode in Power BI to query our fact table in the KQL database, and for the dimensions we are going to use Import mode to get the tables from our Lakehouse. This is the semantic model:

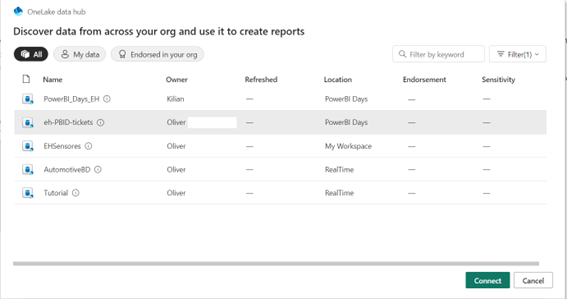

To connect to a KQL Database click on Get Data:

Search for a KQL Database:

Select your KQL Database and click Connect:

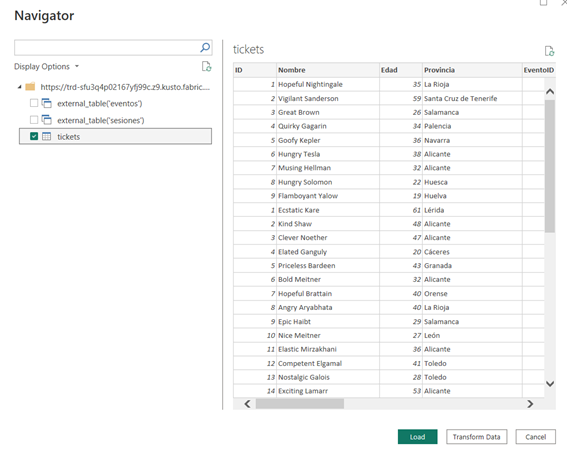

After that, you can select your table and click Load, or click Transform Data if you want to make some changes:

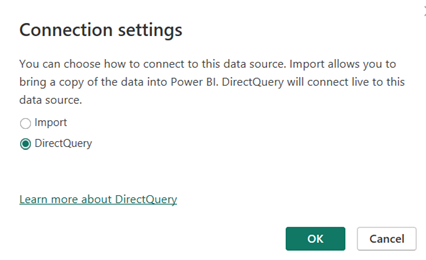

Select DirectQuery mode and click OK:

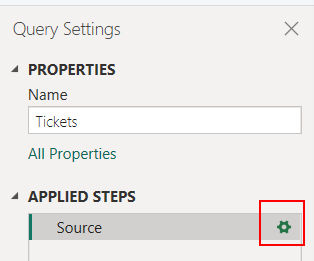

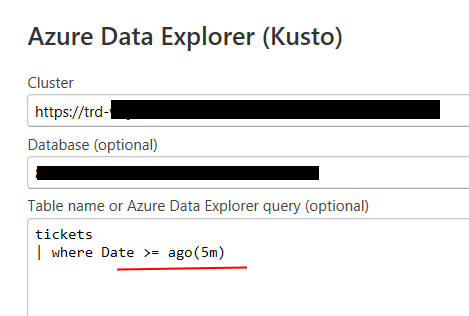

You can then customize your query. For example, if you only want the tickets from the last five minutes, click on Transform data:

Select your table in the left panel, and then select the gear icon in the right panel:

After that, you can change your query to load only the last five minutes, using KQL syntax:
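A minimal sketch of such a query is shown below. `Tickets` is the fact table from this example, while the `Timestamp` column name is an assumption; replace it with the datetime column of your own table.

```kusto
Tickets
| where Timestamp >= ago(5m)   // keep only rows from the last five minutes
```

Because `ago(5m)` is evaluated every time the query runs, each refresh of the report moves the five-minute window forward.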

This approach provides the flexibility to use Import for frequently used data, ensuring high performance, while accessing live data via DirectQuery when it’s critical to have up-to-the-moment information.

The advantages of this architecture are that, by using the KQL database, which is optimized for real-time data queries, it provides a lot of flexibility for querying and analyzing data with excellent performance, allowing you to see results almost instantly in your report in Power BI.

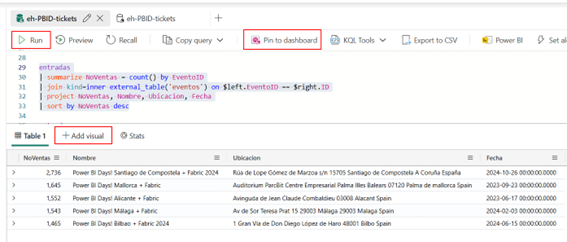

REAL-TIME DASHBOARD

With this approach you can create a Real-Time Dashboard by pinning KQL queries directly. In this example we are going to pin a few queries, and we are also going to create shortcuts in the KQL database to get our dimensions from our Lakehouse, so that we can join our fact table in the KQL database with those dimensions.

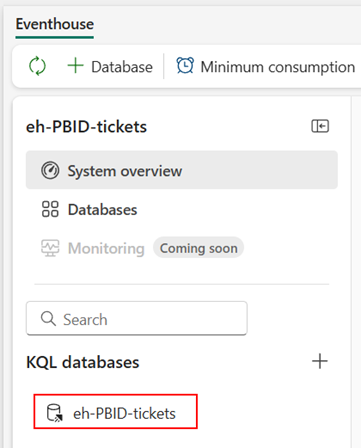

To do that, we first go to the Eventhouse in our Fabric workspace and then click on our KQL database:

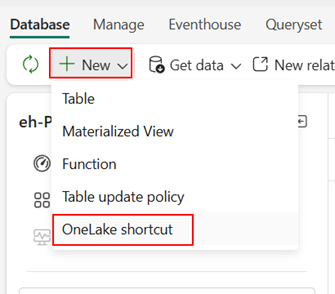

Once we are in our KQL database, we add shortcuts to get the dimension tables. To do so, click on New -> OneLake shortcut:

Then, select your Lakehouse and the table. Once you have added the table, it will appear in the Shortcuts dropdown menu:

And then, you are ready to write some queries and pin them to your Dashboard.

In this example we are going to write a query that joins the tickets with our events dimension table and summarizes the result to count the number of tickets sold for each event:

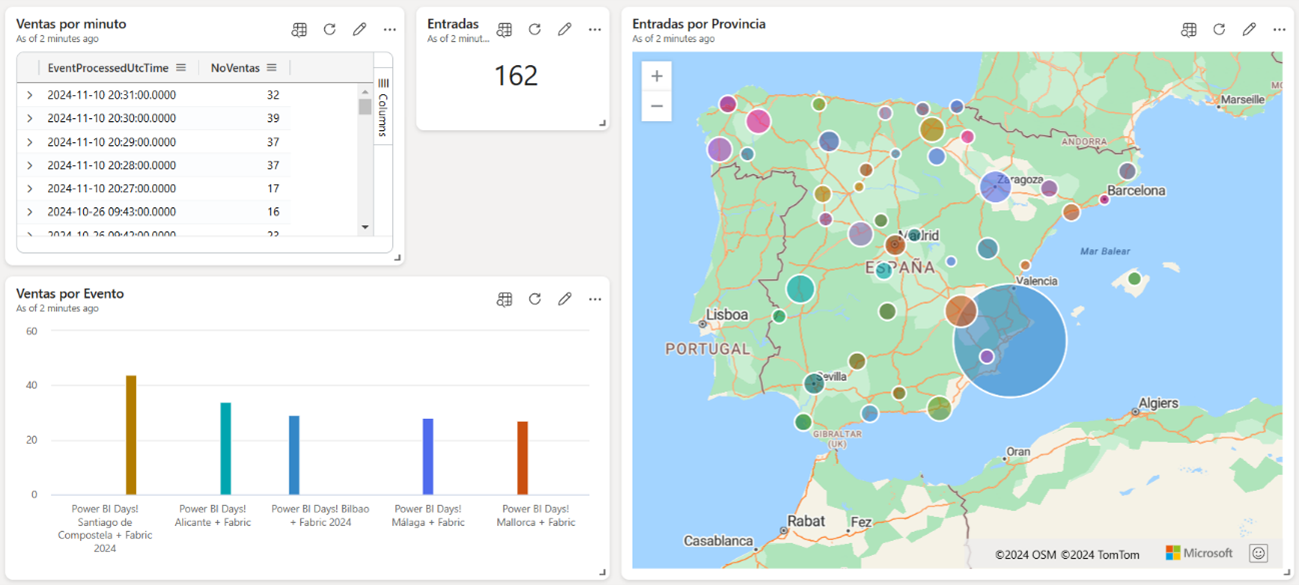
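In text form, a query of this kind could look like the following sketch. The shortcut name `events` and the `EventKey` and `EventName` columns are assumptions made for illustration; use the names from your own tables.

```kusto
Tickets
| join kind=inner external_table('events') on $left.EventKey == $right.EventKey  // enrich tickets with event details
| summarize TicketsSold = count() by EventName                                   // count tickets per event
| order by TicketsSold desc                                                      // best-selling events first
```

The join follows the same `external_table()` pattern used in the Shortcut section, with the real-time fact table on the left and the Lakehouse dimension on the right.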

Select the query and click on Run to see the results. After that, you can click on Pin to dashboard. If you want a different visual, for instance a map or a column chart, click on +Add visual to change it.

And this is the result of pinning some queries to our Real-Time Dashboard:

This method is suitable for monitoring scenarios where real-time feedback is essential. For example, a logistics company might use a Real-Time Dashboard to monitor vehicle locations in real time, while keeping historical data in the Eventhouse for later analysis.

Choosing the right architecture

- For near-instant analytics with data already stored in a Lakehouse: Direct Lake mode is often the best choice.

- For a balance of real-time insights and performance with some data cached: Mixed Mode (Import + DirectQuery) provides flexibility for dashboards that need both fast historical data access and real-time updates.

- For ultra-low-latency visualizations or monitoring dashboards: Real-Time Dashboards built on KQL queries are ideal, especially for monitoring operational metrics like IoT data, live stock prices, or social media trends.

Each of these architectures can be chosen based on your latency requirements, data volume, storage needs, and overall dashboard performance expectations.

Conclusion

Implementing Microsoft Real-Time Analytics provides a powerful way for businesses to unlock value from their data instantly. By setting up real-time ingestion, processing, and visualization workflows, organizations can make timely decisions, optimize operations, and respond proactively to market demands. Whether you choose to leverage Eventstream for continuous data flow or KQL databases for fast querying, the flexibility of Microsoft Fabric enables you to tailor solutions that align with your unique business needs.

As data volumes grow and the demand for immediate insights intensifies, Microsoft Real-Time Analytics is well-equipped to handle the challenges of today’s data-driven landscape. With the right configuration, you can transform raw data into actionable insights from day one, empowering your organization to stay ahead of the curve, enhance customer experiences, and drive meaningful outcomes. Now is the time to embrace the power of real-time data and harness its potential to shape the future of your business.