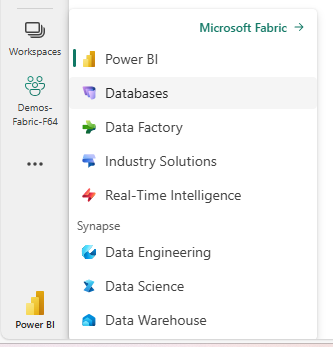

One of the features that were in Private Preview, and that have seen the light of day in Microsoft Ignite, is the possibility of creating databases in Microsoft Fabric. This is a database engine based on the SQL Server engine, like Azure SQL, which will allow us to have a truly unified data platform, enabling us to host operational workloads also in MIcrosoft Fabric, extending the SaaS concept of Microsoft Fabric to transactional database systems.

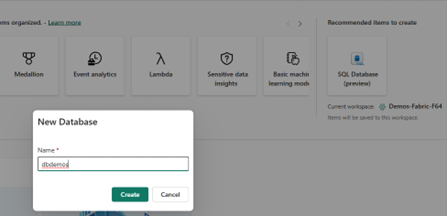

The creation of a database is as simple as the creation of any other Fabric item. Once the option is activated at Fabric tenant level, since it is still a preview option, the possibility of creating a new database will appear, to which we only have to give it a name.

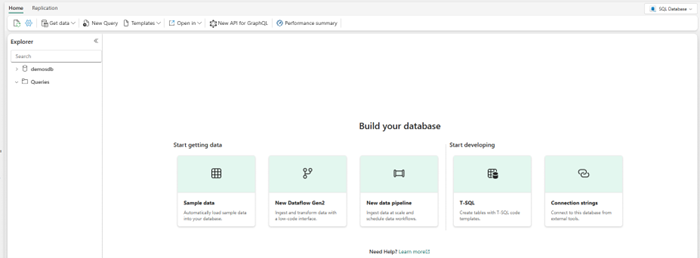

Once the database is created, the Editor opens, where we can see all the available options for data loading and solution development.

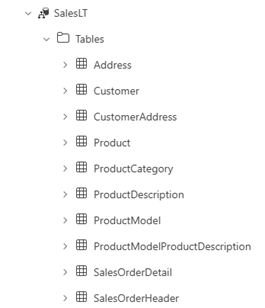

We can load scripts for object creation, or we can load data using dataflows or Data Factory pipelines, in addition to being able to connect with external tools such as SQL Server Management Studio or Azure Data Studio, as well as having a GraphQL API that we can configure to access the objects we have within our database. For the rest of the article, in this case we have loaded the example data, which loads a SalesLT schema with a subset of tables and data from AdventureWorks.

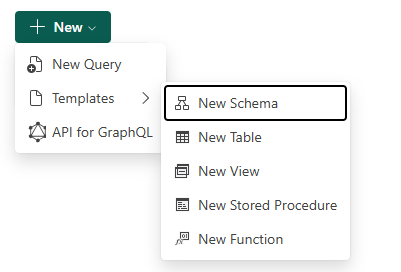

From a ‘development’ point of view with T-SQL, we can create queries, or rely on templates for the creation of the most common objects.

Data Storage

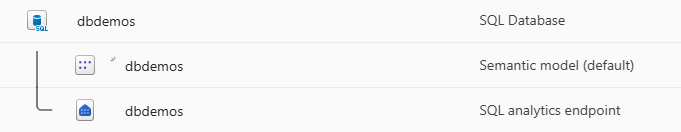

As with the other storage options, we will also have a Semantic Model and a SQL Analytics endpoint to analyse and exploit the data stored there:

But… Why do I need a SQL analytics endpoint when I can already launch native SQL queries to my SQL engine?

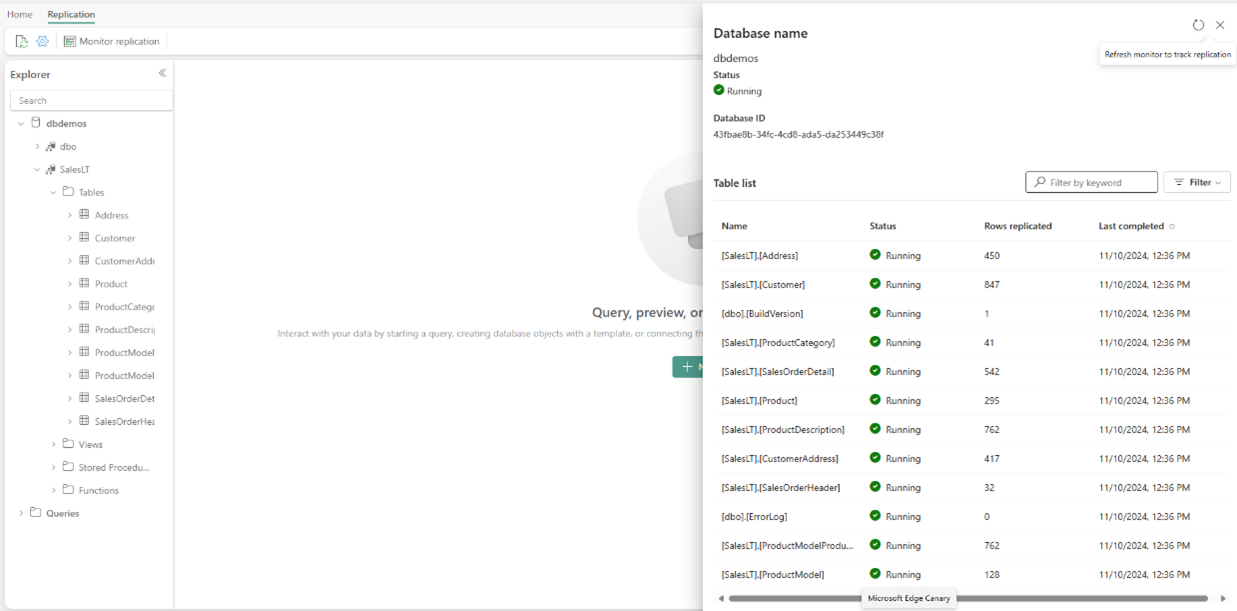

I’m sure that those of you who are curious have already noticed that in the Database Editor, there is a Replication menu in which if we check we can see:

In other words, the data is being replicated to Delta tables that I will be able to query from that SQL Analytics endpoint, so we have, without the need to configure anything, the ability to launch T-SQL queries to the SQL engine for real-time response of the data, and obviously, to make modifications to it if we need to:

• The possibility of launching T-SQL queries to the SQL engine for a real-time response of the data, and obviously, to make modifications to the data if we need to.

• A SQL Analytics Endpoint, designed for more analytical queries, which may not have the latest real-time data, and which enables us to have a Semantic Model in Direct Lake format against this replicated data.

All this is evident if we review the Onelake Explorer and see the structure that is created for each database:

Setting Up the Databases

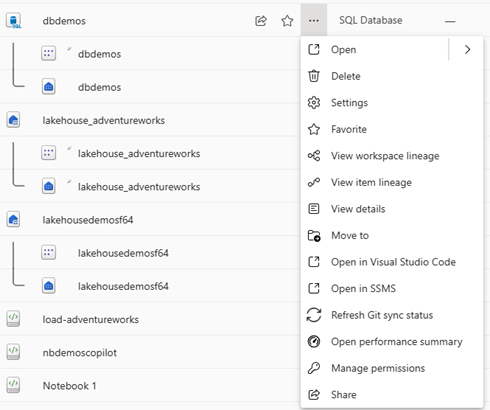

If we look at the options available at the item level, in addition to those already mentioned, we see that we have the option to synchronise the Git status, since we have automatic support for CI/CD through the database projects, in order to automate the passes between environments where we have databases.

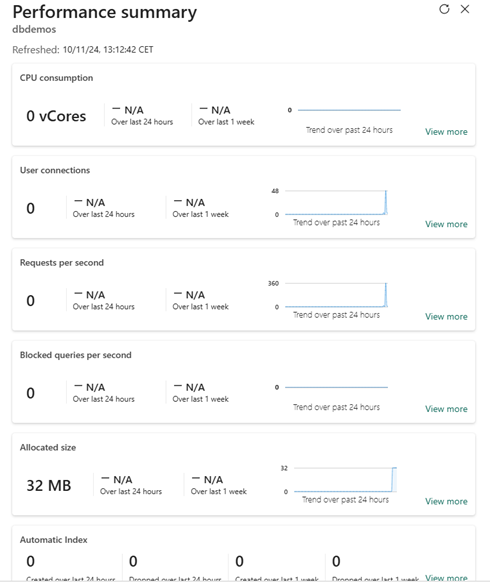

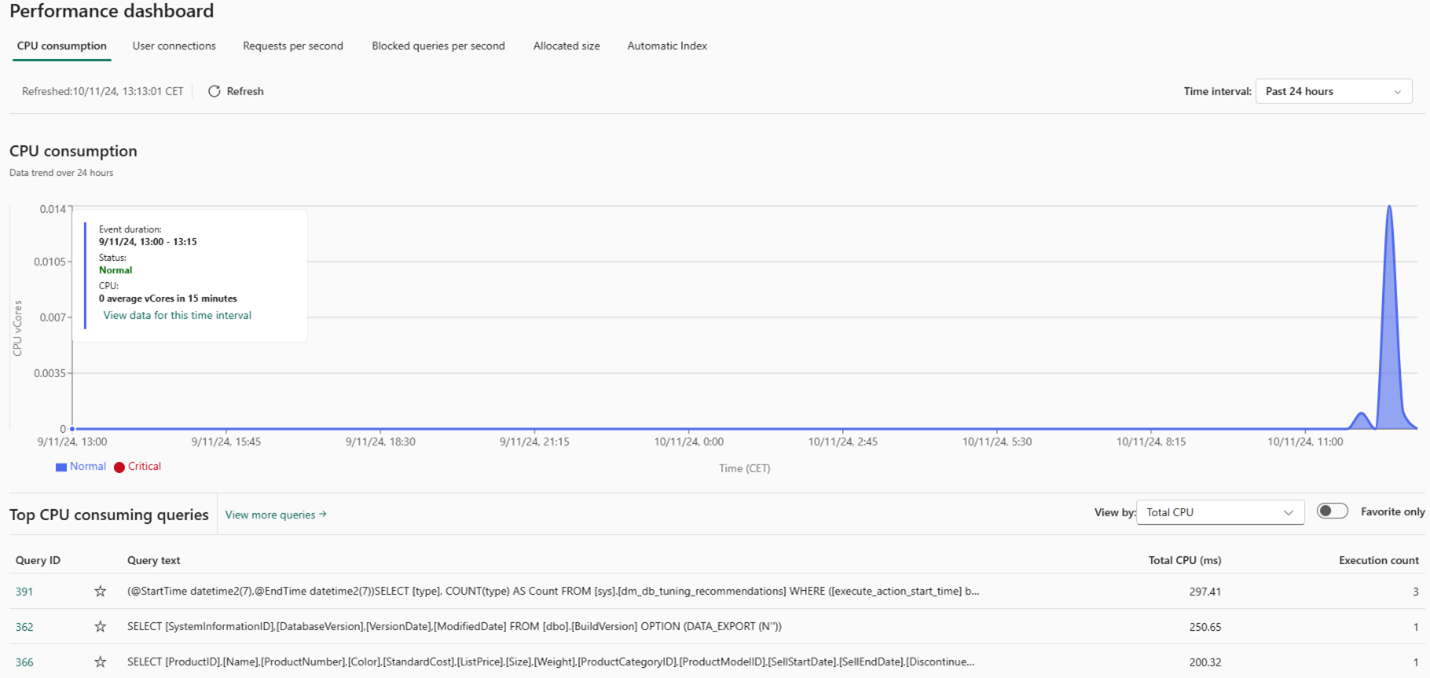

Another relevant aspect is the ‘Performance Summary’. Through this option, we will be able to see the performance data of our database, as well as the consumption of resources.

But not only that, we also have a performance dashboard from which we can see all the details.

The SQL engine incorporates all the auto-scaling and auto-indexing capabilities available in the SQL Server engine, but it is always convenient to be able to review the performance of our solutions.

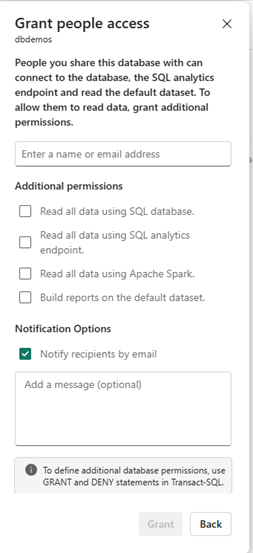

Security

Like any other Microsoft Fabric item, we have options to share access to the databases and configure a series of permissions, which, as can be seen, are oriented towards access through the different endpoints that are exposed, in addition to access to the Semantic Model that is created by default.

Obviously, we have at our disposal all the management of roles, read permissions, write permissions, etc… That we can manage through T-SQL commands, as in any database with SQL Server engine.

Additionally, the data is stored encrypted, ensuring its privacy, as well as being able to use the labels available in Fabric to catalogue our data.

Scenarios

Beyond the forecasts of large consulting firms, which say that by 2028, data platforms will be unified (transactional and analytical) and will be governed by AI, this possibility of having SQL database in Fabric, opens the door to different scenarios, such as:

• Manage master data for analytical solutions, in a completely integrated and simple way, instead of having to load masters in Excel or similar.

• Providing reverse-ETL solutions, where we enable the modification of analytical data and can concentrate that data in SQL endpoints.

• Development of departmental applications, oriented to processes with a large analytical component, and not many requirements.

This is really an integration that can open the door to many other scenarios and simplify data management in companies of all sizes.