The revolution brought about by Generative AI means that, in recent months, our customers have increasingly been asking for solutions that let them query the information in their data platforms conversationally. As part of its strategy of equipping all its tools and solutions with Copilots, Microsoft also includes Copilot in Microsoft Fabric, with different experiences such as Copilot for Power BI (aimed at business users and analysts), Copilot for Data Factory, and Copilot for Fabric consumption (the complete list of Copilots is available here: https://learn.microsoft.com/en-us/fabric/get-started/copilot-fabric-overview ).

These Copilot experiences help us perform tasks within the platform and are tied to specific roles and features. An AI Skill, by contrast, lets us configure how we want it to behave (through specific instructions and examples), and it operates ‘independently’: it is not associated with a specific workload or feature.

To create our first AI Skill, we need to meet a number of requirements, which as of today are as follows:

• We need a Test version of Microsoft Fabric, in a region that has all the workloads.

• We need to enable, at tenant level, the ability to create AI Skills, the ability to use Copilot, and the ability to share AI data across regions.

• We need data in a warehouse or a lakehouse since, for now, it is only possible to generate T-SQL code.

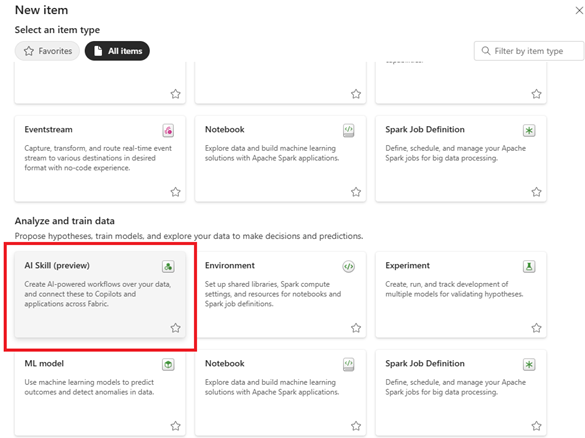

If these requirements are met, then when creating a new item, the option to create an AI Skill will appear, as shown in the image:

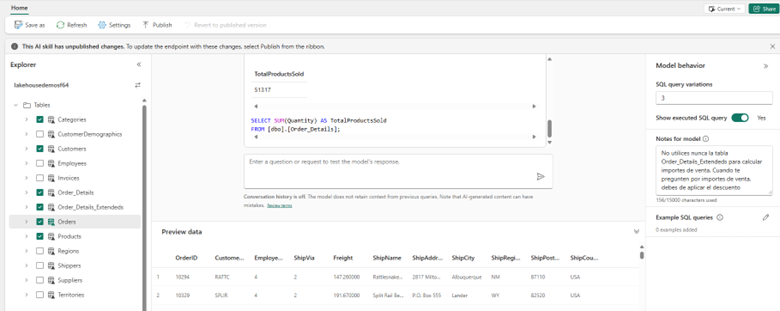

Once we have named our AI Skill, we need to take four relevant aspects into account:

• Which data we want to use. In the Explorer of our lakehouse / warehouse, we can select which tables we want our AI Skill to take into account when answering the questions we ask it.

• Instructions. Using metaprompting techniques, we can give our AI Skill instructions on how we want it to behave when answering the user’s questions.

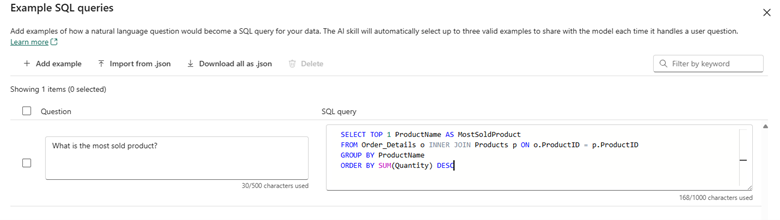

• Examples. Using a well-known prompt engineering technique, few-shot learning, we can give it a series of examples of how we want the user’s questions to be answered.

• Variations of the query. This parameter, which is set to 3 by default, controls how many variants of the query the AI Skill generates and evaluates before deciding on the final query.
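To make these four aspects more concrete, here is a minimal Python sketch of how the selected tables, the instructions and the few-shot examples could be combined into a single prompt. This only illustrates the general metaprompting and few-shot technique: the `SkillConfig`, `FewShotExample` and `build_prompt` names are hypothetical, and we do not know how Fabric actually assembles its prompt internally.

```python
from dataclasses import dataclass, field


@dataclass
class FewShotExample:
    """One question/query pair given to the model as an example (hypothetical type)."""
    question: str
    sql: str


@dataclass
class SkillConfig:
    """Hypothetical container for the four aspects described above."""
    tables: list[str]
    instructions: str
    examples: list[FewShotExample] = field(default_factory=list)
    query_variations: int = 3  # default value mentioned in the article


def build_prompt(config: SkillConfig, user_question: str) -> str:
    """Assemble instructions, table list and examples ahead of the user's question."""
    parts = [
        "Instructions:\n" + config.instructions,
        "Available tables: " + ", ".join(config.tables),
    ]
    # Few-shot learning: each example shows the model the expected question -> query mapping.
    for example in config.examples:
        parts.append(f"Q: {example.question}\nSQL: {example.sql}")
    # The real question goes last, leaving the query for the model to complete.
    parts.append(f"Q: {user_question}\nSQL:")
    return "\n\n".join(parts)


config = SkillConfig(
    tables=["Products", "Order_Details"],
    instructions="Answer with a single T-SQL query.",
    examples=[
        FewShotExample("How many products are there?", "SELECT COUNT(*) FROM Products;")
    ],
)
prompt = build_prompt(config, "What is the most sold product?")
print(prompt)
```

In this sketch, changing the instructions or adding an example changes the text the model sees before the question, which is the intuition behind why these settings alter the generated query.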

Let’s look at a concrete example of how we can modify the behaviour of our AI Skill, through these configurations. We start from a lakehouse that has the data from the Northwind demo database, loaded from this OData service: https://services.odata.org/V4/Northwind/Northwind.svc/

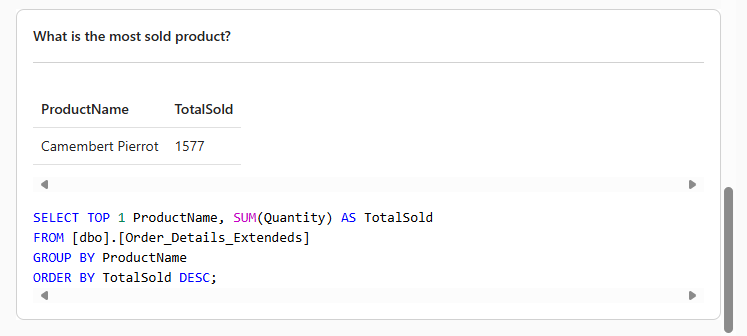

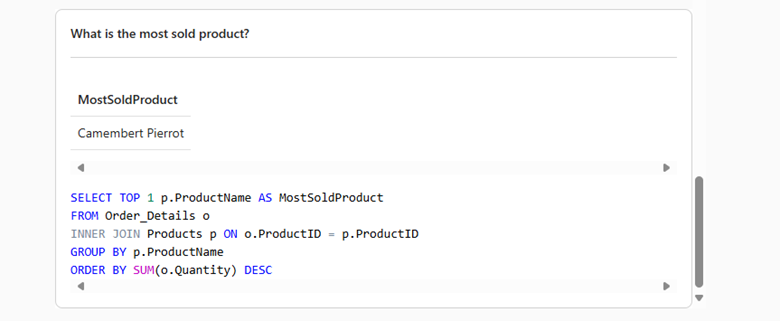

From this data, selecting the tables shown in the image above, we ask the Skill the following question: “What is the most sold product? Perform a query with the extended table containing all the sales information to get it.”

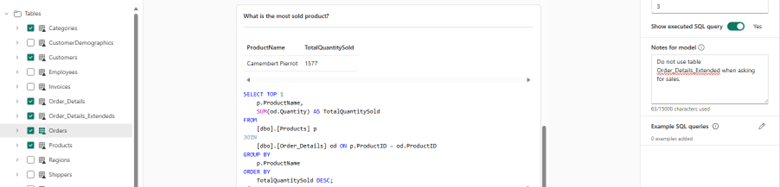

But if we tell it not to use this extended table, it performs a join in order to get the product name.
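The query the Skill actually generated is only visible in the screenshots, so the following is just a sketch of the kind of join involved. It assumes two simplified Northwind-style tables (`Products` and `Order_Details`) with a few made-up rows, and it runs on SQLite via Python so it can be tried locally; in T-SQL the final `LIMIT 1` would typically be written as `SELECT TOP 1` instead.

```python
import sqlite3

# In-memory database with a tiny, made-up subset of Northwind-style data.
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE Products (ProductID INTEGER PRIMARY KEY, ProductName TEXT);
CREATE TABLE Order_Details (OrderID INTEGER, ProductID INTEGER, Quantity INTEGER);
INSERT INTO Products VALUES (1, 'Chai'), (2, 'Chang'), (3, 'Tofu');
INSERT INTO Order_Details VALUES
    (100, 1, 10), (100, 2, 5), (101, 2, 20), (102, 3, 7), (103, 1, 4);
""")

# Join the order lines with the product table to obtain the product name,
# add up the quantities per product and keep the one with the highest total.
MOST_SOLD_SQL = """
SELECT p.ProductName, SUM(od.Quantity) AS TotalQuantity
FROM Order_Details AS od
JOIN Products AS p ON p.ProductID = od.ProductID
GROUP BY p.ProductName
ORDER BY TotalQuantity DESC
LIMIT 1;
"""

most_sold = conn.execute(MOST_SOLD_SQL).fetchone()
print(most_sold)
```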

Similarly, if we edit the examples and add an example like the one in this other image, so that it returns only the name:

We can see how it modifies the query we have given as an example:

Obviously, this is only an illustrative example to show how it works, because we could obtain the same result either by extending the instructions or by being more specific in the question. However, one of the objectives of AI Skills is precisely that users do not need to be excessively specific, and that we can use these metaprompting and few-shot learning techniques so that the Skill is able to answer questions appropriately.

Publishing AI Skills

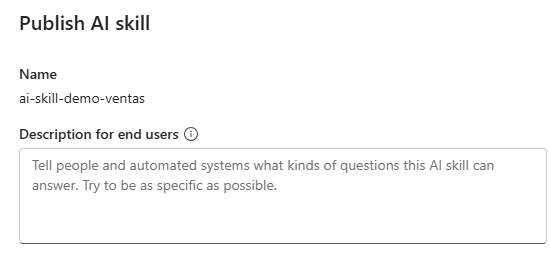

Once we have our AI Skill correctly configured, in order to share it with other users, we need to publish it:

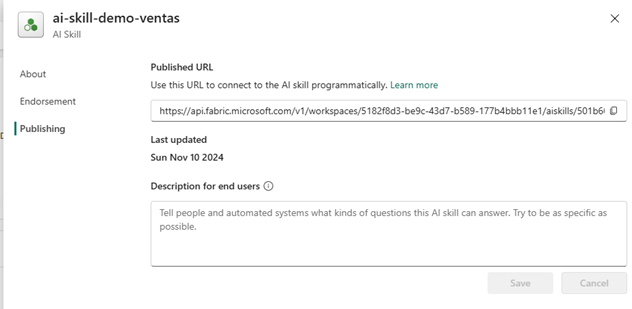

Once published, we have an endpoint at our disposal to use the AI Skill programmatically, so we can call it from a Fabric notebook or from any other application external to Microsoft Fabric.
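As a rough idea of what a programmatic call could look like, the sketch below builds (but does not send) an HTTP POST request for a published AI Skill. Everything specific here is an assumption rather than a documented contract: the URL is a placeholder for the endpoint shown in the publish dialog, the bearer token must be obtained separately, and the `userQuestion` field name in the JSON body should be checked against the current Fabric documentation.

```python
import json
import urllib.request


def build_skill_request(endpoint_url: str, token: str, question: str) -> urllib.request.Request:
    """Build a POST request for a published AI Skill endpoint (assumed request shape)."""
    # Assumption: the endpoint accepts a JSON body with the question in "userQuestion".
    payload = json.dumps({"userQuestion": question}).encode("utf-8")
    return urllib.request.Request(
        endpoint_url,
        data=payload,
        method="POST",
        headers={
            # Assumption: the endpoint expects a Microsoft Entra ID bearer token.
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json; charset=utf-8",
        },
    )


request = build_skill_request(
    "https://example.invalid/published-ai-skill-endpoint",  # placeholder URL
    "PLACEHOLDER_TOKEN",  # placeholder token
    "What is the most sold product?",
)
print(request.get_method(), request.full_url)

# Sending it would look like this (left commented out because the URL is a placeholder):
# with urllib.request.urlopen(request) as response:
#     print(json.loads(response.read()))
```

Building the request separately from sending it keeps the example runnable without credentials and makes it easy to inspect exactly what would be sent.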

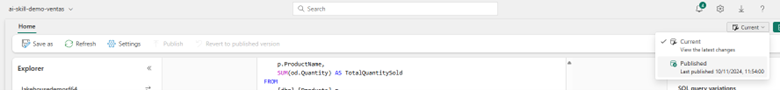

In addition, we can continue to refine our AI Skill without impacting the published version, because a selector is now enabled that lets us choose which version we want to view or modify.

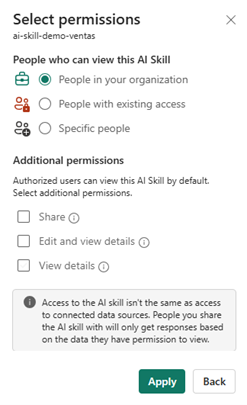

We can, of course, configure the access permissions on the AI Skill item, and those same permissions also apply to the generated endpoint. For a user to be able to query the AI Skill, we need to share the item with them, but we do not have to grant any of the additional permissions, which are intended for users who should be able to reshare the AI Skill or to view and modify its details.

As can be seen in the image, users of the AI Skill are also subject to the permissions of the data sources that the Skill queries.

News Announced at Ignite

What’s new now? During Ignite, two very interesting new features related to AI Skills were announced:

• In addition to the SQL endpoints for Data Warehouse and Lakehouse, AI Skills will also support Semantic Models and Eventhouse. In other words, in addition to generating SQL code, they will also be able to generate DAX and KQL to answer analysts’ questions, including questions that need access to more than one of these sources!

• It has also been announced that AI Skills will integrate directly with Azure AI Agent Service, the new service, also presented this week, that brings together agent-based Generative AI developments. This will make it possible to use the AI Skills created in Fabric as functions or tasks of these agents, whether we create them from Copilot Studio or from Azure AI Studio.

As we can see, this feature is focused on letting us bring Generative AI to the data we have in our OneLake. Through metaprompting and few-shot learning, it gives us the ability to improve the answers Copilot can give us in certain scenarios, and to create as many AI Skills as we want, each focused on a specific area or analysis.